While I’m happy to see Grand Central in Snow Leopard, I won’t be using it in any of our applications anytime soon because that means we’d have to turn our backs on all those PPC users out there, and everyone who has yet to upgrade to Snow Leopard. I suspect that this represents a sizable chunk of the OS X using population, at least at the moment.

It would also be nice if we could use Clojure for writing Cocoa apps, but Apple decided to drop the ball on that one.

However, that doesn’t mean we still can’t write good, fast, multithreaded code.

Actors and Shared Data

Right now we’re in the process of rewriting parts of Espionage’s helper program, which is fairly multithreaded and does most of Espionage’s heavy-lifting. Currently we are using mutex locks (in the form of NSLock) to synchronize some of the shared data in the application, and while locks are “OK”, they can start to get messy when you’ve got a lot going on.

That’s why we’re going to convert the helper to use actors (courtesy of Plausible Labs‘ great PLActorKit). But even when you’ve structured your code to use the actor approach, you still have a problem if those actors need to operate on data that’s shared with other actors or other threads. This is where locks could come in, but locks tend to suck.

Locks are slow, everyone knows that, but in our experience they can also encourage bad code because you can associate a lock with just about anything, it doesn’t have to be a specific piece of data, it can be a group of actions operating on that data, or data related to it. When you don’t have consistency, things can get out of hand.

So I’ve written a class that aims to solve these two problems with locks.

TESharedObject

When you hold a lock, you prevent any other thread from accessing the data that it’s protecting, regardless of whether that is necessary or not. What if the data that you’re protecting is often only read from? Then despite the fact that it’s perfectly fine for multiple threads to read from a piece of data simultaneously, each reader has to wait in line for the lock to become available. This can really slow things down.

TESharedObject is a replacement for locks that takes this into account. It changes the “lock” paradigm in two ways:

First off, it’s a wrapper around shared data, that is as opposed to a lock, which is just another “thing” that you arbitrarily decide is associated with a piece of data, a decision that you may or may not change your mind about as your code evolves.

The other difference is that unlike a lock, it allows multiple readers to access the data at the same time, provided no one’s writing to it. Databases often take this same approach to improve performance.

Semaphores

TESharedObject implements a basic algorithm using the semaphore primitive. Semaphores aren’t used very often in Cocoa programming, so if you’re unfamiliar with them you’ll be forgiven. Quick overview: a semaphore is an entity that has a count associated with it. You can increase the count by calling, say, “up” on it, or decrease it by calling “down” on it. If you call “down” on it when the count is zero, you block until some other thread increases the count.

So, say we have a database, and we want people to be able to read from it safely simultaneously when no one’s writing to it. By using two semaphores and keeping track of how many people are reading we can accomplish this like so (pseudo-code):

semaphore sRead = 1;

semaphore sAccess = 1;

int readerCount = 0;

reader:

down(sRead);

if (++readerCount==1)

down(sAccess);

up(sRead);

access_database();

down(sRead);

if (--readerCount == 0)

up(sAccess);

up(sRead);

writer:

down(sAccess);

access_database();

up(sAccess);

Here the semaphore sAccess is used as the “lock” on the database, or more accurately, to suspend the next thread that calls “down” on it. Only the first reader will call down on sAccess. A second semaphore sRead is used as a backup to sAccess in the situation that another reader is already suspended on sAccess.

The code for the writer is simple, all writers decrement sAccess‘s count, meaning a single writer is enough to stop everyone.

Building A Better Lock

Now that we have the pseudo-code, we need a design for our lock, and to get the design we need to have some sort of an idea of how we plan on using this lock in practice. I know! It should look something like this:

NSMutableString *sharedData = ;

TESharedObject *superLock = ;

reader:

NSObject *obj = ; // like "lock"

NSLog(@"We've got an object! Take a look: %@", obj);

; // like "unlock"

writer:

NSMutableString *aStr = ;

;

;

There, that looks pretty good. Our superLock is bound to the data it’s protecting. When we want to have a look at the data we call -borrowForReading to “borrow” it, and once we’re finished with it we “return” the data by calling -returnObject. Simple enough, and it works just like using a lock. All we have to do is make sure that we don’t write to the data. If we want to write to it, we call -borrowForWriting instead.

Let’s have a look at what’s inside.

-borrowForReading

- (id)borrowForReading

{

semaphore_wait(sRead);

if ( ++readerCount == 1 )

semaphore_wait(sAccess);

semaphore_signal(sRead);

return obj;

}

There’s our pseudo-code! Well, about half of it, I bet you can guess where the other half is. But before we get to that, let’s take a look at -borrowForWriting:

-borrowForWriting

- (id)borrowForWriting

{

semaphore_wait(sAccess);

writing = YES;

return obj;

}

Here the code diverges a bit with the introduction of a new variable writing. We use it so that whether we called -borrowForReading or -borrowForWriting, we only have to call:

-returnObject

And that’s it. We’re almost done now, if you’ve made it here, thanks for sticking with me. I only have two more things to show you, and I think it’ll be worth it.

TESharedMap

Another aspect of shared data that we’ve neglected to address is the notion of “globality”. Yes, I did just make that word up, but it has important consequences! When you’re dealing with shared data, you’re often dealing with global variables, and dammit, now you’ve gotta find a place to put them!

A lot of people just put them at the top. They make long laundry lists of static declarations at the top of some file, and for each piece of shared data two declarations are required: the data, and the lock for the data. This can get kinda ugly, and ugly code is often harder to read and maintain. Our TESharedObject suffers from this same problem, it’d be nice if we could just focus on the data and not have to deal with the lock that’s associated with it.

We can get close to this with TESharedMap. TESharedMap acts as a “summoner”, we tell it: “Give us our object!” And it does. We don’t need to worry about keeping track of the associated TESharedObject, TESharedMap handles that for us. Put something into the map and it magically becomes thread-safe, so long as you remember to retrieve it only through the map.

Here’s its interface:

Benchmarks

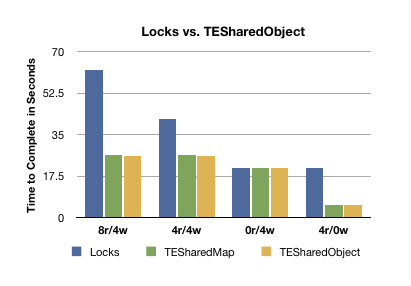

What are the performance benefits of using TESharedObject and TESharedMap instead of NSLock and the like? For this I’ve written 3 programs, they each do the same thing, the only difference is that each uses a different synchronization primitive that we’ve discussed (TESharedObject, TESharedMap, and NSLock).

Here’s the TESharedMap version:

static int reader = 0;

static int writer = 0;

static int msgIdx = 0;

static int tCount = NUM_READERS + NUM_WRITERS;

static NSString *msgs = {

@"Hello World!", @"how are you?", @"random message!", @"hope we have enough of these...",

@"I'm sure we will", @"there so many!", @"How many messages does it take", @"to screw in a lightbulb?"

};

int

{

NSAutoreleasePool *pool = ;

NSMutableString *message = ;

;

for (int i=0; i<NUM_READERS; ++i)

;

for (int i=0; i<NUM_WRITERS; ++i)

;

// wait for threads to terminate...

while (tCount)

sleep(1);

printf("good-bye\n");

;

return 0;

}

The program launches a certain number of readers and writers, and they each access an NSMutableString a certain number of times. To simulate computation, each reader and writer sleeps for a certain amount of time upon accessing the string. The number of readers and writers, as well as other parameters, can be adjusted by modifying the “Config.h” file:

// play with these parameters

I ran 4 benchmarks using the *NIX ‘time’ command comparing the 3 synchronization primitives against each other by adjusting the number of readers and writers and keeping the other parameters constant.

Dramatic Results

First we see that the extra layer that TESharedMap adds on top of TESharedObject is pretty much negligible in terms of performance.

The results for 0r/4w is the worst-case scenario for TESharedObject (no readers), and as expected it performs pretty much exactly like the typical lock.

The results for 4r/0w is the best-case scenario, when there are only readers accessing the data. This is the real payoff, almost no penalty for accessing shared data! You can’t get this with mutex locks.

The other two results show what happens when you have both readers and writers, in which case TESharedObject quickly overtakes the mutex lock, but what’s most interesting is that as you add more readers, TESharedObject takes a fairly negligible hit while the lock’s performance is significantly degraded. Why?

The reason becomes pretty obvious if you actually run these tests yourself. What happens is that the readers dominate the lock. This happens because in this program, the data is besieged by a constant and unrelenting stream of readers and writers who lust after the data until they’ve had their fill. In this situation, the more readers you add, the less likely it becomes that a writer will be able to get a hold of it, so what happens is that there’s suddenly a stampede of readers with virtually unfettered access to the data which they quickly gobble up, and then after most or all of the readers have had their fill the writers get their turn.

So while TESharedObject can provide a significant performance boost, if your lock is highly contested by readers they can shut out any writers. In most of the situations that I’ve seen locks used, this doesn’t happen. But if you are using a TESharedObject in a maelstrom like this, you’ll probably want to subclass it and modify the -borrowForReading method so that it sleeps if the readerCount is too high, which will make it a bit slower, but it will still be at least as fast as a lock, and you’ll have better looking code.

I think that’s it for now. All the code in this post, including the code for TESharedObject and TESharedMap, is provided under an BSD-style license and can be downloaded by clicking the icon below:

Enjoy! 😉

– Greg (twitter: @taoeffect)

Thank you very much for sharing! It helps me a lot!